Introduction & purpose

This is not just a team meeting. It's an onboarding audit with teeth.

You'll walk through your current onboarding journey using the DIVE Method and the Risk Web framework to identify where small issues spiral, where assumptions live, and where you can build in strategic "stopper blocks" to prevent downstream chaos.

What you'll need

- 60–90 minutes

- A virtual or in-person room with your onboarding, CS, implementation, and support teams

- Post-its, whiteboard or Miro board, or a shared doc

- Copies of your current onboarding workflow/process (if available)

- This guide and the downloadable resources included

Pre-work: Come prepared to go deep

To get the most value from this Lunch & Learn, each participant should arrive with one recent onboarding experience in mind—preferably one that had bumps, delays, or lessons learned.

Bring the following:

- A quick summary of the onboarding project (no need to over-prepare; just know the basics: who, what, when, and where things got messy).

- 5–10 things that either:

  - Caused friction or delays

  - Created confusion, misalignment, or tension

  - Were assumed to be fine, but later turned out to be problematic

  - Or simply made you say, "That shouldn't have happened."

Not sure what counts? Look for:

- A stakeholder who disappeared after kickoff

- A miscommunication between teams

- A scope or data surprise mid-onboarding

- A missed milestone that caught everyone off guard

- A process step that always feels rushed, skipped, or unclear

Pro Tip: The more honest you are about what didn't work, the more valuable this session will be.

Agenda overview

0:00–0:10 - Set the Stage – Why We're Here

0:10–0:25 - DIVE Deep: Walkthrough of the Framework

0:25–0:45 - Group Activity: Map the Risk Web

0:45–1:00 - Identify the Assumptions

1:00–1:15 - Design Your Stopper Blocks

1:15–1:30 - Debrief, Commit, and Document

Facilitation script + Step-by-Step

1. Set the stage (10 min)

"Our goal today is to take a real, honest look at our onboarding process. Not just what's documented, but how it actually plays out. We're not here to point fingers; we're here to stop the dominos."

Facilitator Notes

- Begin by sharing a brief personal story of when an onboarding went sideways

- Establish psychological safety: emphasize that this is about improvement, not blame

- Explain that we're looking for patterns, not isolated incidents

Prompts for Discussion

- Where have you seen onboarding go sideways recently?

- What's a moment where you knew, "We're in trouble"—but it started small?

- If you could wave a magic wand and fix one part of our onboarding process, what would it be?

Activity: Ask each participant to write down their "first domino" moment from their pre-work example on a sticky note and place it on a shared board.

2. Walk through the DIVE method (15 min)

Quick recap

D = Discover assumptions and hidden risks

I = Investigate how those risks compound

V = Validate with real data or conversations

E = Engineer resilience through small, repeatable system changes

Facilitator notes

- For each step of DIVE, provide a real example from your organization

- Connect each step to practical actions the team can take

- Show sample outcomes (e.g., "This is what a validated assumption looks like")

Prompts for discussion

- What's something we've assumed to be true in our onboarding process that we haven't confirmed lately?

- Where in our journey are we operating on "I think" instead of "I know"?

- Which part of our process has the most "it depends" answers?

Activity

Have the group brainstorm one example for each DIVE component based on their collective experience:

- One assumption they need to discover

- One risk connection they need to investigate

- One belief they need to validate

- One process they need to engineer for resilience

3. Risk web activity (20 min)

Instructions

- Choose one recent onboarding project from the pre-work examples

- Draw one issue in the center (e.g., "Late kickoff")

- Then draw out all the ways that issue affected other parts of the onboarding

- Look for loops: Where did one issue feed another?

Facilitator notes

- Use a whiteboard or digital collaboration tool

- Draw lines between connected issues

- Circle areas where multiple issues connect (these are high-impact intervention points)

- Look for places where small issues created disproportionate impacts

Prompts for discussion

- What was the first domino in this project?

- What consequences snuck up on us later?

- Which dependencies caused the most downstream issues?

- Where did we lack visibility into brewing problems?

Activity

Split into smaller groups if you have more than 8 participants. Each group maps one risk web, then presents their top 3 insights to the larger group.

4. Identify assumptions (15 min)

"Listen for phrases like: 'We usually…' 'They should…' 'I assume…' 'That's always…' Those are flags."

Facilitator notes

- Distinguish between facts, expectations, and assumptions

- Highlight how assumptions become "invisible" over time

- Focus on high-impact assumptions (those that affect multiple steps)

Group reflection

- Where do we have too much confidence and not enough confirmation?

- What risks have we normalized as "just part of onboarding"?

- What do we believe about our customers that might not be universally true?

- Where are we assuming alignment that hasn't been explicitly confirmed?

Activity

Create an "Assumption Inventory" from the Risk Web:

- List all assumptions identified in the activity

- Rate each on a scale of 1-5 for:

  - Confidence (how sure are we?)

  - Impact (how much does it matter?)

  - Validation ease (how easily can we confirm it?)

- Circle the high-impact, low-confidence assumptions for immediate follow-up
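If the inventory ends up in a shared doc or spreadsheet, the "circle the high-impact, low-confidence" step can be sketched in a few lines of Python. This is only an illustration: the `Assumption` class, the `needs_follow_up` helper, the cutoffs (confidence of 2 or lower, impact of 4 or higher), and the sample rows are all assumptions made for the example, not part of the guide.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    text: str
    confidence: int       # 1 = pure guess, 5 = confirmed
    impact: int           # 1 = minor, 5 = affects many steps
    validation_ease: int  # 1 = hard to confirm, 5 = one quick question


def needs_follow_up(a: Assumption, max_confidence: int = 2, min_impact: int = 4) -> bool:
    """True for high-impact, low-confidence assumptions (hypothetical cutoffs)."""
    return a.confidence <= max_confidence and a.impact >= min_impact


# Made-up sample rows for illustration only.
inventory = [
    Assumption("Customer data is clean", confidence=2, impact=5, validation_ease=3),
    Assumption("Kickoff attendees are the decision-makers", confidence=1, impact=4, validation_ease=5),
    Assumption("Customer timeline matches ours", confidence=5, impact=3, validation_ease=5),
]

# List the easiest-to-validate items first so quick confirmations happen early.
follow_ups = sorted(
    (a for a in inventory if needs_follow_up(a)),
    key=lambda a: a.validation_ease,
    reverse=True,
)

for a in follow_ups:
    print(f"{a.text} (confidence {a.confidence}, impact {a.impact})")
```

Adjust the cutoffs to whatever your group agrees counts as "high" and "low" on the 1-5 scale.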

5. Design stopper blocks (15 min)

Instructions

- Now that we've spotted the dominos, how do we stop them?

- What checkpoints, alignment calls, questions, or playbook updates could prevent this risk from happening again?

Facilitator notes

- Focus on practical, realistic interventions

- Distinguish between one-time fixes and systemic improvements

- Consider both process changes and communication strategies

- Remember: good stopper blocks are specific, measurable, and scalable

Prompts for discussion

- Where could we validate earlier?

- What moments could become standard checkpoints?

- What's one stopper block we'll commit to adding this quarter?

- How might we make validation a habit rather than an exception?

Activity

For each high-priority risk identified, design one concrete stopper block using this format:

- Risk: [Describe the domino]

- Current process: [What happens now]

- Proposed stopper block: [New checkpoint or process]

- Owner: [Who will implement]

- Success measure: [How we'll know it worked]
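For teams that track follow-ups in a script or tool rather than on sticky notes, the five-field format above could be captured as a small record with a completeness check. This is a hedged sketch: `StopperBlock` and `missing_fields` are hypothetical names, and the "current process" value is an invented placeholder; the risk and proposed stopper block reuse the technical-contact example from the add-on resources.

```python
from dataclasses import dataclass, fields


@dataclass
class StopperBlock:
    risk: str                     # Describe the domino
    current_process: str          # What happens now
    proposed_stopper_block: str   # New checkpoint or process
    owner: str                    # Who will implement
    success_measure: str          # How we'll know it worked

    def missing_fields(self) -> list[str]:
        """Names of fields left blank, so incomplete blocks are caught before close-out."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


block = StopperBlock(
    risk="Technical contact changed mid-implementation",
    current_process="No backup contact is named at kickoff",  # invented placeholder
    proposed_stopper_block="Require backup technical contact from day one",
    owner="",            # left blank on purpose to show the check
    success_measure="",  # left blank on purpose to show the check
)

print(block.missing_fields())
```

A block with an empty owner or success measure is a sign it is not yet specific or measurable enough to commit to.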

6. Debrief and document (15 min)

Facilitator notes

- Summarize key insights from each section

- Focus on actionable takeaways

- Assign clear ownership for follow-up items

- Set expectations for how these insights will be integrated into existing processes

Group close-out prompts

- What's one risk we'll never overlook again?

- What's one process improvement we can make scalable today?

- How do we make sure we never say "it depends" again without documenting the conditions?

- What surprised you most about today's discussion?

Activity

Each participant commits to one concrete action they'll take in the next week to implement a stopper block or validate an assumption.

Jasmine's rule: "If your process depends on the customer being easy, it's not a process; it's a gamble."

What to do after the lunch & learn

- Assign owners to finalize new stopper blocks or process changes

- Add new insights to onboarding documentation or playbooks

- Plan to revisit your risk web quarterly or after every major post-mortem

- BONUS: Turn this into part of onboarding for new CSMs—teach risk from day one

30-day follow-up plan:

- Week 1: Document all stopper blocks and distribute to the team

- Week 2: Implement top 3 priority stopper blocks

- Week 3: Validate top 5 assumptions identified

- Week 4: Review early results and adjust as needed

Success metrics:

- Reduction in "surprise" issues during onboarding

- Decreased time spent on firefighting

- Improved time-to-value for customers

- More predictable onboarding timelines

- Clearer handoffs between teams

Add-on resources

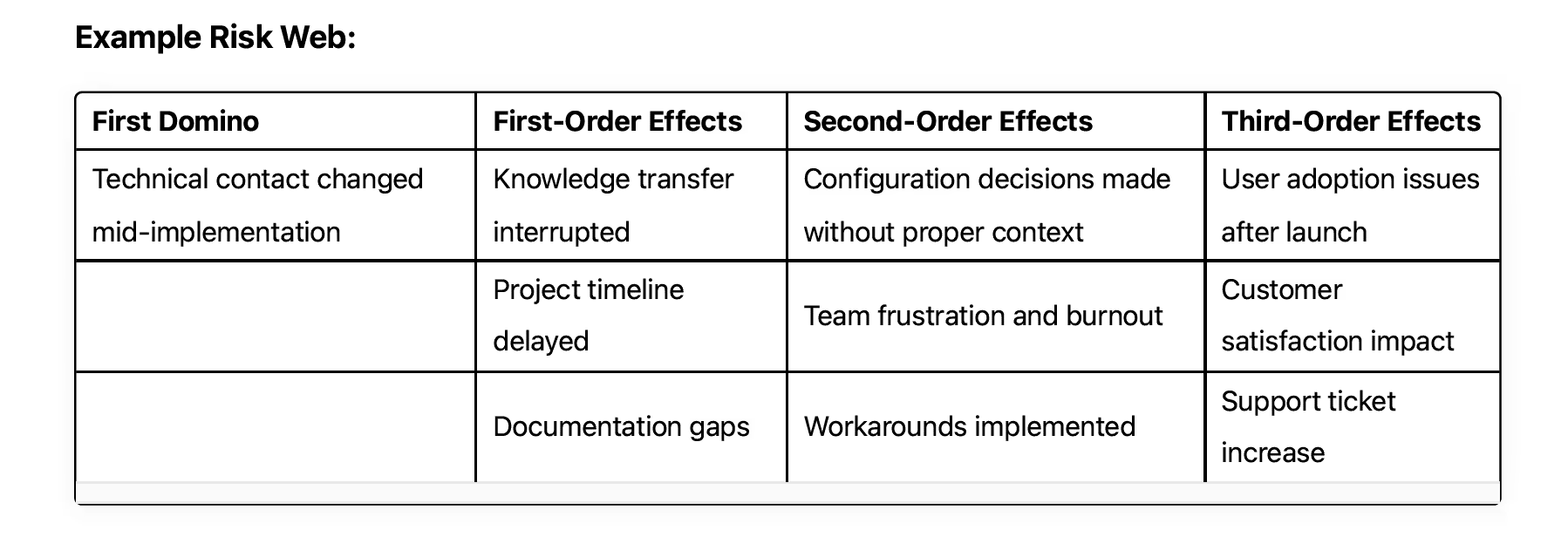

Domino risk mapping template

Instructions: Use this template to visually map how risks cascade through your onboarding process. Start with one trigger event and trace all possible impacts.

How to use this template:

- Identify the trigger (first domino)

  - Write a specific onboarding issue in the center circle

  - Example: "Technical contact changed mid-implementation"

- Map first-order effects

  - What immediately happens as a result?

  - Draw these in the inner ring

  - Example: "Knowledge transfer interrupted" or "Project timeline delayed"

- Map second-order effects

  - What happens because of those first-order effects?

  - Draw these in the middle ring

  - Example: "Configuration decisions made without proper context"

- Map third-order effects

  - What are the long-term or final impacts?

  - Draw these in the outer ring

  - Example: "User adoption issues after launch"

- Identify connection points

  - Draw lines between related effects

  - Highlight loops where effects feed back into each other

  - Circle points where multiple effects converge (high-leverage intervention points)

- Prioritize intervention points

  - Mark places where a simple check or process could break the chain

  - Focus on early-stage interventions that prevent multiple downstream effects

Stopper block opportunities

- Require backup technical contact from day one

- Create knowledge transfer checklist for contact transitions

- Implement configuration review checkpoint after personnel changes
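If a finished risk map gets transcribed from the whiteboard, the "connection points" step of the template (where effects converge, where they loop) can be checked mechanically. The sketch below is one possible way to do that, not part of the template: the dictionary layout and the function names `convergence_points` and `find_loops` are assumptions, and two of the arrows (timeline delay leading to configuration decisions, and configuration rework feeding back into the timeline) are invented to show a convergence and a loop.

```python
from collections import Counter

# Each key is an issue; its list holds the effects it leads to (one arrow each).
risk_web = {
    "Technical contact changed mid-implementation": [
        "Knowledge transfer interrupted",
        "Project timeline delayed",
    ],
    "Knowledge transfer interrupted": [
        "Configuration decisions made without proper context",
    ],
    "Project timeline delayed": [
        "Configuration decisions made without proper context",  # invented arrow
    ],
    "Configuration decisions made without proper context": [
        "User adoption issues after launch",
        "Project timeline delayed",  # invented arrow: rework feeds the delay
    ],
    "User adoption issues after launch": [],
}


def convergence_points(web):
    """Effects with two or more incoming arrows (candidate intervention points)."""
    incoming = Counter(effect for effects in web.values() for effect in effects)
    return sorted(node for node, count in incoming.items() if count >= 2)


def find_loops(web):
    """Find loops with a depth-first walk; each loop is reported once."""
    found = set()

    def visit(node, path):
        if node in path:
            cycle = path[path.index(node):]
            pivot = cycle.index(min(cycle))  # rotate so duplicates collapse
            found.add(tuple(cycle[pivot:] + cycle[:pivot]))
            return
        for nxt in web.get(node, []):
            visit(nxt, path + [node])

    for start in web:
        visit(start, [])
    return sorted(found)


print("Converging effects:", convergence_points(risk_web))
print("Loops:", find_loops(risk_web))
```

The walk revisits paths, so it is meant for whiteboard-sized maps rather than large graphs.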

DIVE implementation checklist

D - Discover assumptions

Questions to ask:

- What are we assuming about the customer's:

  - Technical environment?

  - Team structure and availability?

  - Decision-making process?

  - Success metrics?

  - Internal priorities?

- What parts of our process do we describe with "it depends"?

- Where do we have the least visibility during onboarding?

- What have we labeled as "one-off issues" more than once?

Actions to take:

- Review the last 3-5 onboardings for common friction points

- Ask team members to list their top 3 onboarding anxieties

- Identify steps with the most variance in time or quality

- Document all conditions behind "it depends" scenarios

I - Investigate connections

Questions to ask:

- Which step delays have the biggest ripple effects?

- Where do we see the same issue manifesting in different ways?

- Which teams or people are consistently impacted by upstream issues?

- What problems tend to cluster together?

Actions to take:

- Map dependencies between onboarding steps

- Identify which teams are affected by delays in each step

- Calculate the average impact (in days) of common delays

- Trace one major issue backward to find root causes
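The "average impact (in days)" action above is simple arithmetic once delays are logged somewhere. A minimal sketch, assuming you can export delays as (cause, days slipped) pairs; the log entries, cause labels, and the `average_impact_days` helper are made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log: (delay cause, days the onboarding slipped)
delay_log = [
    ("Late kickoff", 5),
    ("Late kickoff", 9),
    ("Data surprise", 12),
    ("Stakeholder unavailable", 4),
    ("Data surprise", 8),
]


def average_impact_days(log):
    """Group slipped days by cause and return the mean for each cause."""
    by_cause = defaultdict(list)
    for cause, days in log:
        by_cause[cause].append(days)
    return {cause: mean(days) for cause, days in by_cause.items()}


averages = average_impact_days(delay_log)

# Largest average first: these are the delays with the biggest ripple effects.
for cause, avg in sorted(averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{cause}: {avg:.1f} days")
```

With only a handful of onboardings the averages will be noisy, so treat them as a conversation starter rather than a benchmark.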

V - Validate with data

Questions to ask

- How do we know this assumption is true?

- What evidence contradicts our current belief?

- Who could confirm or deny this assumption?

- What would change if this assumption were false?

Actions to take

- Schedule validation conversations with key stakeholders

- Review data from your CRM or project management system

- Create simple yes/no validation questions for kickoff meetings

- Establish metrics to track assumption accuracy over time

E - Engineer resilience

Questions to ask

- What simple check would have caught this early?

- How can we make this validation automatic?

- What's the minimum viable process to prevent this issue?

- Who needs visibility they don't currently have?

Actions to take

- Design checkpoints at high-risk transition points

- Create fallback plans for common failure scenarios

- Build templatized questions for validation meetings

- Set up automatic alerts for key risk indicators

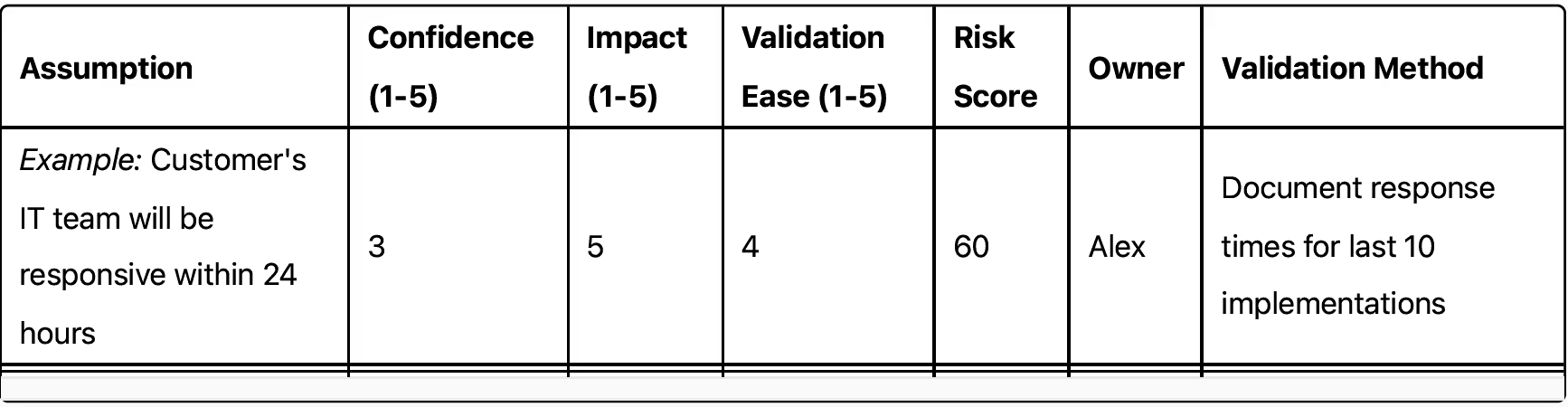

Assumption audit template

How to use this template

- List all assumptions surfaced during your discussion

- Rate each assumption on the three scales

- Calculate the Risk Score (multiply the three ratings)

- Prioritize validating assumptions with the highest scores
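The Risk Score step can be sketched as follows. Two assumptions are baked in and should be changed to match your copy of the template, since its scale directions are not spelled out in this text: confidence is rated 5 = confirmed and flipped before multiplying (so low confidence raises the score), and validation ease is multiplied as rated (so easy checks rise to the top). The `risk_score` function and the sample ratings are hypothetical; the assumption names come from the list of common high-risk assumptions in this guide.

```python
SCALE_MAX = 5


def risk_score(confidence: int, impact: int, validation_ease: int) -> int:
    """Multiply the three 1-5 ratings, flipping confidence (assumed direction)."""
    for rating in (confidence, impact, validation_ease):
        if not 1 <= rating <= SCALE_MAX:
            raise ValueError(f"rating {rating} is outside 1-{SCALE_MAX}")
    uncertainty = SCALE_MAX + 1 - confidence  # 5 (sure) -> 1, 1 (guess) -> 5
    return uncertainty * impact * validation_ease


# Made-up ratings: (confidence, impact, validation ease)
rows = {
    "All necessary data is accessible and clean": (2, 5, 3),
    "The scope is clear and agreed upon by all parties": (4, 4, 4),
    "Customer timeline expectations match reality": (3, 3, 2),
}

ranked = sorted(rows, key=lambda name: risk_score(*rows[name]), reverse=True)
for name in ranked:
    print(risk_score(*rows[name]), name)
```

If your template already rates confidence as "5 = least sure", drop the flip and multiply the three numbers directly.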

"It Depends" trigger words

When you hear these phrases, document the assumption:

- "Usually..."

- "Typically..."

- "Most customers..."

- "They should know..."

- "That's just how it works..."

- "I'm sure they'll..."

- "That rarely happens..."

- "We can figure it out later..."

- "I think..."

- "Hopefully..."
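If meeting notes or call transcripts are available as text, the trigger phrases above can be flagged automatically as a first pass. This is a rough sketch under that assumption: `flag_assumptions` is a hypothetical helper, the sample notes are invented, and simple phrase matching will produce false positives that a person still needs to review.

```python
import re

# The trigger phrases listed above, lowercased for matching.
TRIGGER_PHRASES = [
    "usually", "typically", "most customers", "they should know",
    "that's just how it works", "i'm sure they'll", "that rarely happens",
    "we can figure it out later", "i think", "hopefully",
]

_pattern = re.compile(
    r"\b(" + "|".join(re.escape(p) for p in TRIGGER_PHRASES) + r")\b",
    re.IGNORECASE,
)


def flag_assumptions(notes: str):
    """Return (line, [phrases found]) for each line containing a trigger phrase."""
    flagged = []
    for line in notes.splitlines():
        normalized = line.replace("\u2019", "'")  # treat curly apostrophes as straight
        hits = [m.group(1).lower() for m in _pattern.finditer(normalized)]
        if hits:
            flagged.append((line.strip(), hits))
    return flagged


# Invented sample notes for illustration.
sample_notes = """Customer usually sends the export within a week.
Kickoff is booked for Tuesday.
I think their admin has API access, hopefully."""

for line, hits in flag_assumptions(sample_notes):
    print(f"{hits}: {line}")
```

Each flagged line is a candidate entry for the assumption audit, not a confirmed assumption.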

Validation methods:

- Direct question: Ask the customer explicitly

- Data analysis: Review past onboarding metrics

- Process test: Run a small test of the process

- Expert consultation: Check with subject matter experts

- Documentation review: Check for written confirmation

- Scenario planning: Test what happens if the assumption is false

Common high-risk assumptions to validate:

- Customer stakeholders have internal alignment

- Technical requirements are fully understood

- The customer has allocated sufficient resources

- Customer timeline expectations match reality

- All necessary data is accessible and clean

- The customer understands their responsibilities

- Internal teams have capacity for the implementation

- The scope is clear and agreed upon by all parties

Deep-dive question bank

Use these questions throughout your onboarding process to uncover hidden risks and validate assumptions. They're organized by onboarding phase to help you identify and address issues at the right time.

Pre-kickoff questions

Stakeholder mapping:

- "Beyond the people in this meeting, who else needs to be involved for this project to succeed?"

- "Who can say 'no' to this project who isn't in the room right now?"

- "Who will be using the system day-to-day that we should hear from?"

- "How are decisions typically made for projects like this at your organization?"

- "What's the approval process for unexpected changes or scope adjustments?"

Success definition:

- "If we were to meet a year from now, what would make you say this implementation was successful?"

- "How will this implementation be measured internally at your organization?"

- "What concerns do your executives have about this project?"

- "What previous implementation went really well, and why?"

- "What's the one thing that absolutely cannot go wrong in this implementation?"

Resource reality check:

- "What other major initiatives is your team working on during our implementation timeline?"

- "How much time per week can each of your team members realistically dedicate to this project?"

- "What's your process for prioritizing this project if competing priorities emerge?"

- "What skills or knowledge gaps on your team are you concerned about?"

- "How comfortable is your team with the pace we've outlined?"

Kickoff questions

Technical landscape:

- "What systems will our solution need to integrate with?"

- "Where do you anticipate potential technical complications?"

- "What data quality issues have you encountered in past projects?"

- "How standardized are your processes across departments/locations?"

- "What technical constraints should we be aware of from the start?"

Timeline validation:

- "What dates on our timeline align with other critical events at your company?"

- "Which milestones feel most at risk based on your experience?"

- "What would need to happen for us to accelerate any parts of this timeline?"

- "Where should we build in extra buffer time based on your past implementations?"

- "How quickly can your team typically turn around feedback or approvals?"

Risk anticipation:

- "What's gone wrong in previous implementations that we should avoid?"

- "What dependencies are you most concerned about?"

- "Where do you think we're being too optimistic in our planning?"

- "What's the biggest risk to this project's success from your perspective?"

- "What assumptions are we making that we should validate right now?"

Mid-Implementation questions

Progress reality check:

- "On a scale of 1-10, how confident are you that we'll meet our next milestone?"

- "What's one thing that's working better than expected? What's one thing that's more challenging?"

- "What concerns aren't being discussed in our regular status meetings?"

- "Where do you feel we need more clarity or documentation?"

- "What's one thing we could change about our implementation approach right now?"

Stakeholder alignment:

- "How are you communicating our progress to your leadership team?"

- "What feedback are you hearing from stakeholders who aren't directly involved?"

- "Has anything changed in your organizational priorities since we started?"

- "Are all the right people still engaged at the level we need?"

- "What competing priorities have emerged since we kicked off?"

Technical validation:

- "What discoveries have you made about your data or systems that we should address?"

- "Are our initial assumptions about integration points still accurate?"

- "What parts of the configuration need more testing or validation?"

- "Where are you seeing potential gaps between our solution and your requirements?"

- "What technical decisions would you like to revisit?"

Pre-launch questions

Readiness assessment:

- "What keeps you up at night about going live?"

- "On a scale of 1-10, how prepared do your end users feel?"

- "What would make you want to delay the launch?"

- "What's your backup plan if something critical doesn't work at launch?"

- "What metrics will you be watching most closely in the first week after launch?"

User preparation:

- "How confident are you in your team's ability to use the new system on day one?"

- "What resistance points have you identified among end users?"

- "Where do you anticipate the most questions or support needs?"

- "How prepared is your internal support team to handle initial issues?"

- "What additional training or documentation would be valuable before we go live?"

Transition planning:

- "How will you manage the transition from old to new systems?"

- "What business processes need to change alongside this implementation?"

- "Who will be your internal champions after launch?"

- "What's your communication plan for announcing the change?"

- "How will you gather and address feedback in the first 30 days?"

Post-launch questions

Success verification:

- "What's working better than expected? What's more challenging?"

- "Are you seeing the business outcomes you anticipated? Why or why not?"

- "What feedback are you getting from your leadership team?"

- "What metrics have improved since implementation? Which haven't?"

- "What would you do differently if you could restart this implementation?"

Adoption assessment:

- "Where are you seeing resistance or low adoption?"

- "What workarounds are users creating instead of using the new system?"

- "What feature gaps have users identified?"

- "Which teams are having the most success with the new system? What are they doing differently?"

- "What additional training or resources would improve adoption?"

Continuous improvement:

- "What processes could we optimize now that the system is live?"

- "What's the next area of opportunity you want to focus on?"

- "What unexpected benefits have you discovered?"

- "What documentation or training needs updating based on actual usage?"

- "What would make this system even more valuable to your organization?"

Remember Jasmine's rule: "If your process depends on the customer being easy, it's not a process—it's a gamble."
